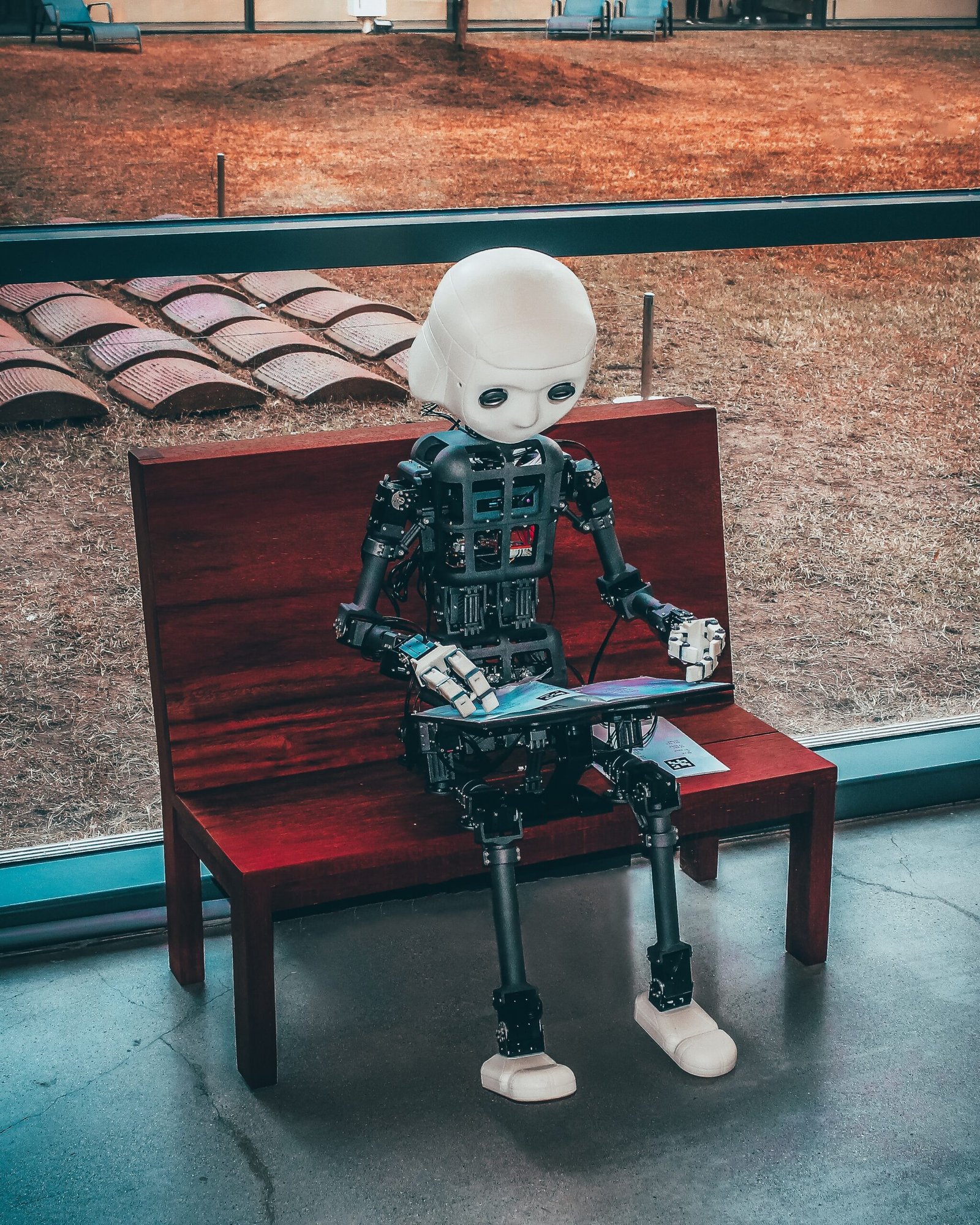

A new report reveals that teachers are reporting disciplinary actions for students who use generative artificial intelligence (AI) tools, such as ChatGPT. While there is concern among educators that these tools may be used for cheating, the report suggests that students are primarily utilizing them for personal issues rather than academic purposes. According to the survey, half of teachers stated that they knew a student who faced negative consequences for using or being accused of using generative AI. However, the majority of students who completed the survey claimed that they rarely use ChatGPT to cheat, but rather seek assistance with anxiety, mental health issues, and interpersonal conflicts. The report highlights the disconnect between teachers’ concerns and students’ actual usage, emphasizing the need for a better understanding of how generative AI tools are being utilized in the classroom.

Teachers Report Disciplinary Actions for Students Using AI

Introduction

Students' use of artificial intelligence (AI) tools has raised concerns among educators and, in some cases, led to disciplinary action. A recent survey conducted by the Center for Democracy and Technology found that half of teachers knew a student who faced negative consequences for using, or being accused of using, generative AI such as ChatGPT to complete classroom assignments.

Concerns about Students Using Generative AI

Educators have raised concerns about students' use of generative AI tools, fearing that they may lead to cheating. However, the survey results indicate that students are not primarily using AI for academic purposes. Instead, students are turning to generative AI for help with personal problems, such as anxiety, mental health issues, issues with friends, or family conflicts. This disconnect between teachers’ concerns and students’ actual use of AI tools can lead to an unnecessarily adversarial relationship between teachers and students.

Impact on Classroom Assignments

The survey reveals that half of the teachers surveyed knew a student who was disciplined or faced other negative consequences for using, or being accused of using, generative AI. This proportion was even higher (58%) among teachers who work with special education students. The concerns about cheating are evident, as 90% of teachers suspect that students are using AI tools to complete assignments. However, students themselves reported that they rarely use ChatGPT to cheat, highlighting the disconnect between teachers’ suspicions and students’ actual intentions.

Disconnect Between Teachers and Students

The survey findings highlight a significant disconnect between teachers and students when it comes to generative AI usage. While teachers expressed distrust and suspicion towards students, a majority of students reported using AI tools for personal reasons rather than academic purposes. This disconnect can create an adversarial relationship between teachers and students, leading to misunderstandings and disciplinary actions based on false assumptions.

Lack of Guidance and Training

The survey also indicates that there is a lack of guidance and training for both parents and teachers on how to navigate the use of generative AI tools. Only 40% of parents reported receiving guidance on the appropriate use of AI tools without violating school rules. Additionally, only 24% of teachers reported receiving training on how to respond if they suspect a student has used AI to cheat. The lack of guidance and training exacerbates the disconnect between teachers and students and contributes to disciplinary actions based on misunderstandings.

Rising Digital Privacy Concerns

Apart from concerns about generative AI, the survey revealed a sharp increase in digital privacy concerns among students and parents. Seventy-three percent of parents reported being concerned about the privacy and security of student data collected and stored by schools, up from 61% the previous year. Similarly, 62% of students expressed data privacy concerns, compared to 57% in the previous year. This increase in concerns is likely due to the growing frequency of cyberattacks on schools, which have made student records vulnerable to breaches.

Increased Anxiety Due to Cyberattacks on Schools

Schools have become a primary target for ransomware gangs, whose attacks compromise sensitive student records. The exposure of student psychological evaluations, reports on campus rape cases, disciplinary records, and other confidential information has heightened anxiety among students and parents. The survey found that students in special education and their parents were more likely to report concerns about school data privacy and security. One in five parents reported being notified of a data breach at their child’s school, further contributing to heightened apprehension.

Sensitive Student Records at Risk

The survey results highlight the vulnerability of sensitive student records to cyberattacks. Student records containing confidential and highly sensitive information are at risk of exposure, which can have severe consequences for students and their families. The breach of student records compromises privacy, potentially leading to identity theft, psychological distress, and discrimination.

Growing Worry about Digital Surveillance Technology

The use of digital surveillance technology in schools has raised concerns among students, parents, and educators. Student activity monitoring tools, which rely on artificial intelligence algorithms, aim to ensure students’ safety but can disproportionately impact certain groups based on race, disability, sexual orientation, and gender identity. Additionally, the survey found that over a third of teachers reported schools monitoring students’ personal devices, raising concerns about the extent of digital surveillance within educational settings.

Disparate Impacts on Students Based on Race, Disability, Sexual Orientation, and Gender Identity

The survey suggests that digital surveillance technology and AI-powered monitoring tools can result in disparate impacts on students from marginalized communities. Black parents expressed greater concern about information obtained from online monitoring tools falling into the hands of law enforcement. Filtering and blocking online content also disproportionately impact LGBTQ+ youth, potentially violating federal civil rights laws and impeding their access to education.

Concerns about Filtering and Blocking Online Content

The survey findings also highlight concerns regarding the filtering and blocking of online content in schools. Digital tools used to restrict certain online material can effectively amount to a digital book ban, limiting students’ access to diverse perspectives and information. Approximately three-quarters of students reported that web filtering tools prevented them from completing school assignments, with LGBTQ+ youth disproportionately affected.

Violation of Federal Civil Rights Laws

The survey’s observations of disparate impacts based on race, sex, disability, and sexual orientation raise concerns about potential violations of federal civil rights laws. Schools have a legal responsibility to prevent discriminatory outcomes and ensure equal access to education. A coalition of civil rights groups, in a letter to the White House and the Education Secretary, called for increased regulation and accountability to address potential threats to students’ civil rights posed by ed tech practices.

Advocacy for Stronger Regulations on Ed Tech Practices

The Center for Democracy and Technology, along with a coalition of civil rights groups, has urged federal officials to take stronger action to protect students’ civil rights in light of the survey findings. The coalition emphasized that existing civil rights laws require schools to be accountable for their conduct and that of the companies they partner with. The advocates called for increased transparency, stronger protections for sensitive student data, and guidelines to ensure that AI and digital surveillance technology do not contribute to discrimination or hinder access to education.

In conclusion, the survey’s findings indicate the need for a more nuanced understanding of how students use generative AI tools and how that use leads to disciplinary action. They also highlight the growing concerns about digital privacy, cyberattacks on schools, and the disparate impacts of AI and digital surveillance on marginalized student populations. To address these concerns, stronger regulations and guidelines are necessary to protect students’ civil rights and ensure their access to an equitable education.